NVIDIA's Groundbreaking AI Chips: The Future of Technology

The Foundation of AI: NVIDIA's Role

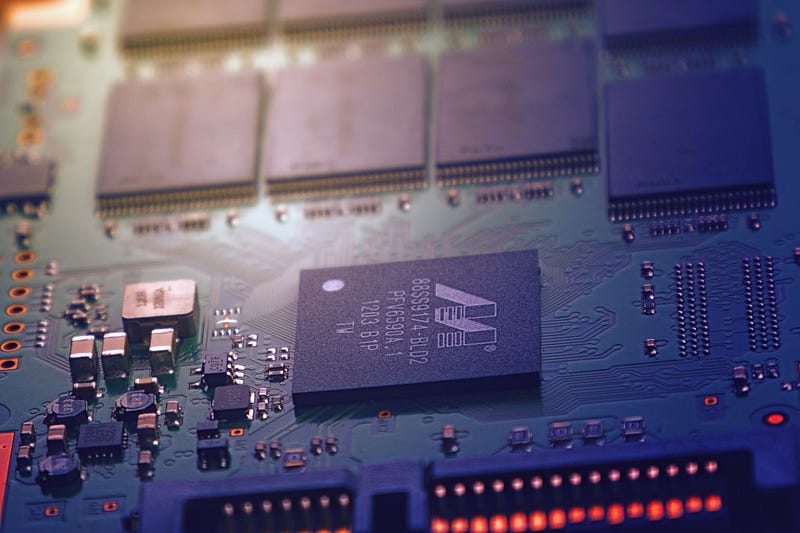

The evolution of Artificial Intelligence is significantly shaped by the quality of data provided to train algorithms. But what drives the impressive efficiency and speed of AI systems like ChatGPT? The answer lies in the deployment of advanced chips.

In March 2022, several months before ChatGPT launched in late 2022, NVIDIA announced its H100 GPU, built on the Hopper architecture. Each chip packs 80 billion transistors and includes a processing engine known as the “Transformer Engine,” which is designed to accelerate the training of transformer-based AI models such as those behind ChatGPT.

With these enhancements, the adoption of AI technologies is set to soar, allowing for faster and more efficient processing of tasks.

The IT Industry's Demand for Superchips

As the capabilities of this technology become more apparent, the IT sector is eagerly looking forward to the integration of these revolutionary Superchips into their server architectures. Recent reports from the Financial Times highlight a trend where major industry players are scrambling to place orders for these innovative chips.

However, NVIDIA is struggling to meet the overwhelming demand. Production of these chips is projected to ramp up significantly by mid-2024, with an anticipated output of 1.5 million units, a dramatic increase from the 500,000 units expected in 2023.
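To put those figures in perspective, the jump from 500,000 to 1.5 million units can be worked out directly. The short Python sketch below uses only the two numbers quoted above; the function name `growth` is purely illustrative.

```python
def growth(previous_units: int, projected_units: int) -> tuple[float, float]:
    """Return (multiple, percent_increase) between two yearly outputs."""
    multiple = projected_units / previous_units
    percent_increase = (projected_units - previous_units) / previous_units * 100
    return multiple, percent_increase


units_2023 = 500_000      # expected output for 2023, as quoted above
units_2024 = 1_500_000    # anticipated output for 2024, as quoted above

multiple, percent = growth(units_2023, units_2024)
print(f"{multiple:.1f}x the 2023 volume, a {percent:.0f}% increase")
```

In other words, the projected output is three times the 2023 volume, which is a 200% increase.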

The importance of servers equipped with AI capabilities is becoming increasingly clear. As technology evolves, the pace of innovation will accelerate, potentially driving up service costs.

Challenges of Upgrading Infrastructure

Despite the excitement surrounding these advancements, several challenges accompany the adoption of such powerful processing units. One notable issue is the increased energy requirements and heat generation associated with these chips, necessitating larger server racks. This shift will demand modifications in data center infrastructures and associated budget allocations.
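A rough way to see why power becomes the limiting factor is to count how many GPU servers fit under a rack's power budget. The sketch below is a back-of-the-envelope estimate, not a sizing tool: the per-GPU draw, the eight-GPU server layout, the per-server overhead, and both rack budgets are all assumed values chosen for illustration, and `servers_per_rack` is a hypothetical helper.

```python
import math


def servers_per_rack(rack_budget_kw: float, server_draw_kw: float) -> int:
    """Number of whole servers that fit under a rack's power budget."""
    if server_draw_kw <= 0:
        raise ValueError("server_draw_kw must be positive")
    return math.floor(rack_budget_kw / server_draw_kw)


# Illustrative assumptions only; none of these values come from the article.
GPU_WATTS = 700           # assumed power draw per GPU
GPUS_PER_SERVER = 8       # assumed server configuration
OVERHEAD_KW = 4.0         # assumed CPUs, memory, fans, and networking per server
LEGACY_RACK_KW = 10.0     # assumed budget of an older rack
UPGRADED_RACK_KW = 40.0   # assumed budget of an upgraded rack

server_kw = GPU_WATTS * GPUS_PER_SERVER / 1000 + OVERHEAD_KW

print(f"Per-server draw: {server_kw:.1f} kW")
print(f"Legacy rack fits: {servers_per_rack(LEGACY_RACK_KW, server_kw)} server(s)")
print(f"Upgraded rack fits: {servers_per_rack(UPGRADED_RACK_KW, server_kw)} server(s)")
```

Under these assumptions a single server nearly exhausts the older rack's budget, which is the kind of constraint that pushes operators to rework power delivery and cooling.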

The Future of AI Processing

The Superchip Revolution

NVIDIA has already introduced the GH200 Grace Hopper Superchip, which pairs a 72-core Arm-based Grace CPU with a Hopper GPU containing 528 Tensor Cores. It offers up to 480GB of LPDDR5X memory alongside either 96GB of HBM3 or 144GB of HBM3e memory. NVIDIA positions this chip to lead the industry in AI processing, particularly in natural language processing applications.

AI Processing in Mobile Devices

Currently, AI processing predominantly occurs in the cloud, with data sent from devices to servers for computation. However, future advancements may see AI capabilities integrated directly into mobile devices, enhancing user experience and functionality.

Energy-Efficient Innovations

The Eyeriss project, developed at MIT with involvement from NVIDIA researchers, targets energy-efficient chips for running deep neural networks. These designs aim to improve data processing capabilities while minimizing energy consumption, chiefly by reducing how much data has to move between memory and compute units.

As advancements in AI continue to unfold, significant breakthroughs are on the horizon. Researchers are relentlessly enhancing chip performance to facilitate faster and more efficient processing across various applications. Prepare for the transformative impact of AI!
